How to Transform YouTube Videos into Searchable Text Using an LLM and Python

This article is a step-by-step guide to building a Python program that downloads the audio from a YouTube video, transcribes it, and builds a vector index that lets you ask questions about the content of the video. The goal is to help demystify the process for enthusiasts and beginners in programming and data science.

Intermediate knowledge of Python is helpful for implementing this code and adjusting it to specific needs. An OpenAI API key is also required.

Ask questions about YouTube videos

Imagine being able to grab your favorite YouTube video, turn its audio into text, and then search through that text easily by asking questions about it. Sounds awesome, right? Well, guess what? You can totally do it, and I’m here to show you how.

In this article, we’re going to explore how to create a Python program that does three things: first, it downloads the audio from any YouTube video you like. Then, it turns that audio into text, and finally, it organizes those words in a clever way so you can efficiently find exactly what you’re looking for.

Step1: Downloading audio from YouTube

I used two main packages here: pytube to access the audio stream of a YouTube video, and moviepy to convert this stream to an MP3 file, which is then stored.
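Because the URL may come from user input, it can help to check that it looks like a YouTube link before handing it to the downloader. The sketch below is an addition, not part of the original program: `extract_video_id` is a hypothetical helper that uses only the standard library, and the 11-character ID pattern is an assumption based on how YouTube links commonly look, not a documented guarantee.

```python
import re
from urllib.parse import urlparse, parse_qs

# Assumption: video IDs are 11 URL-safe characters.
_VIDEO_ID = re.compile(r"[A-Za-z0-9_-]{11}")


def extract_video_id(url):
    """Return the video ID from a common YouTube URL form, or None."""
    parsed = urlparse(url.strip())
    host = (parsed.hostname or "").lower()
    # Treat www. and m. hosts the same as the bare domain
    for prefix in ("www.", "m."):
        if host.startswith(prefix):
            host = host[len(prefix):]
            break

    candidate = None
    if host == "youtu.be":
        # Short links: https://youtu.be/<id>
        candidate = parsed.path.lstrip("/").split("/")[0]
    elif host == "youtube.com":
        if parsed.path == "/watch":
            # Standard links: https://www.youtube.com/watch?v=<id>
            candidate = parse_qs(parsed.query).get("v", [None])[0]
        else:
            # Embedded players and shorts: /embed/<id>, /shorts/<id>
            parts = parsed.path.strip("/").split("/")
            if len(parts) == 2 and parts[0] in ("embed", "shorts"):
                candidate = parts[1]

    if candidate and _VIDEO_ID.fullmatch(candidate):
        return candidate
    return None
```

A caller could refuse to start the download when this returns `None`, which catches an unedited placeholder string or a link to another site before any network request is made.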

    # If needed, install the required libraries first, for example:
    #   pip install pytube moviepy

    import os

    from pytube import YouTube
    import moviepy.editor as mp  # import path used by moviepy 1.x

    def download_youtube_audio(youtube_url, output_path):
        temp_path = 'temp_audio.mp4'

        # Create a YouTube object
        yt = YouTube(youtube_url)

        # Select the first audio-only stream
        audio_stream = yt.streams.filter(only_audio=True).first()

        # Download the audio stream to a temporary file
        audio_stream.download(filename=temp_path)

        # Convert the downloaded file to MP3
        clip = mp.AudioFileClip(temp_path)
        clip.write_audiofile(output_path)
        clip.close()

        # Remove the temporary MP4 file
        os.remove(temp_path)

    youtube_url = "url for youtube video"
    # In case you want to enter the video to use directly:
    # youtube_url = input("Enter the URL of the video you want to download: \n>>")

    output_path = 'output_file.mp3'
    download_youtube_audio(youtube_url, output_path)

Step2: Transcribe audio to text

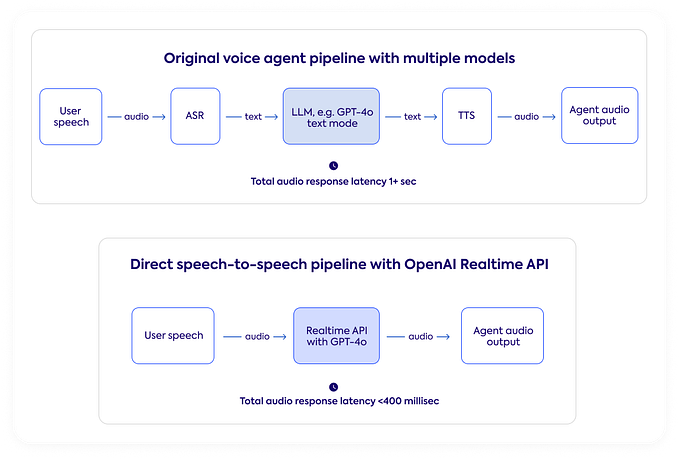

I then transcribed the MP3 file to a text file using Whisper. For this I used the openai package to connect to the OpenAI API, python-dotenv to read the “.env” file, and os to access environment variables.

To access the OpenAI API, I obtained an API key, which I stored under the name ‘OPENAI_API_KEY’ in a “.env” file in the same folder as the Python file.
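A couple of cheap checks before calling the API can save a failed request. The following is a minimal sketch, not part of the original program: `preflight` is a hypothetical helper, and the 25 MB figure is my understanding of the upload limit for the transcription endpoint at the time of writing, so treat it as an assumption and confirm it against the current API documentation.

```python
import os

# Assumed upload limit for the transcription endpoint; verify in the API docs.
MAX_UPLOAD_BYTES = 25 * 1024 * 1024


def preflight(audio_path, key_name="OPENAI_API_KEY", max_bytes=MAX_UPLOAD_BYTES):
    """Return a list of problems; an empty list means the call looks safe to make."""
    problems = []
    # The key must already be in the environment (for example, loaded from .env)
    if not os.environ.get(key_name):
        problems.append(f"environment variable {key_name} is not set")
    # The audio file must exist and fit within the assumed size limit
    if not os.path.isfile(audio_path):
        problems.append(f"audio file not found: {audio_path}")
    elif os.path.getsize(audio_path) > max_bytes:
        problems.append(f"audio file exceeds {max_bytes} bytes and may need splitting")
    return problems
```

Calling `preflight("output_file.mp3")` after the .env file has been loaded and printing any returned messages is enough to catch a missing key or an oversized recording before the upload starts.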

    import os

    from dotenv import load_dotenv, find_dotenv
    from openai import OpenAI

    # Read the local .env file
    _ = load_dotenv(find_dotenv())

    # Create the OpenAI client
    client = OpenAI(api_key=os.environ['OPENAI_API_KEY'])

    # Send the MP3 file to Whisper; the file is closed when the block ends
    with open("output_file.mp3", "rb") as audio_file:
        transcript = client.audio.transcriptions.create(
            model="whisper-1",
            file=audio_file,
            response_format="text"
        )

    # The encoding can be different depending on the language of
    # the video
    with open('transcript.txt', 'w', encoding='utf-8') as f:
        f.write(transcript)

Step3: Create an index from the transcription

The final step is to organize the transcribed text in a way that makes it easy to search and query. This is achieved by creating a vector index, which is essentially a structured representation of the transcript. Techniques such as tokenization and vectorization are employed here. The transcript is split into chunks, and each chunk is converted into a vector, a series of numbers that represents its meaning. At query time the question is converted into a vector in the same way, and the chunks whose vectors are closest to it are retrieved, which makes the index a powerful tool for searching and analyzing audio content.

LlamaIndex provides ways to load data and to build a vector index from it. In this case I used the “Unstructured” loader to read a text file, and the VectorStoreIndex class to create the index from this data.
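To make the idea of comparing texts as vectors concrete, here is a toy sketch using only the standard library. It is an illustration under loud assumptions: `embed` simply counts words, whereas a real pipeline would use a learned embedding model, and both function names are made up for this example.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': a sparse word-count vector (stand-in for a learned model)."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine_similarity(a, b):
    """Cosine of the angle between two sparse vectors; 0.0 if either is empty."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


question = embed("how do I download the audio")
chunk_a = embed("first we download the audio from the video")
chunk_b = embed("the index is stored in memory")

# The chunk that shares more words with the question scores higher
print(cosine_similarity(question, chunk_a) > cosine_similarity(question, chunk_b))
```

Word counts only match exact words; learned embeddings are used in practice because they also place differently worded passages with similar meaning close together.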

    # If needed, install the llama-index package:
    #   pip install llama-index

    import os

    import nest_asyncio
    import openai
    from dotenv import load_dotenv, find_dotenv
    # Assumes a llama-index release that exposes these names at the top level
    from llama_index import VectorStoreIndex, download_loader

    # Read the local .env file
    _ = load_dotenv(find_dotenv())
    openai.api_key = os.environ['OPENAI_API_KEY']

    # Allow nested event loops (typically needed inside notebooks)
    nest_asyncio.apply()

    # Load the transcript with the "Unstructured" reader
    TextReader = download_loader("UnstructuredReader")
    loader = TextReader()
    documents = loader.load_data("transcript.txt")

    # Build the vector index from the loaded documents
    index = VectorStoreIndex.from_documents(documents)

As a result, index contains the vectors that can be used for subsequent searches.
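Indexes of this kind are generally built from smaller pieces of a document, not from the whole text at once, so that a query can pull back only the relevant passage. The sketch below shows one simple way to split a transcript into overlapping chunks. It is not LlamaIndex's own splitter; `chunk_words` and its default sizes are assumptions chosen for illustration.

```python
def chunk_words(text, chunk_size=200, overlap=20):
    """Split text into chunks of up to chunk_size words.

    Consecutive chunks share `overlap` words so that a sentence cut at a
    boundary still appears whole in at least one chunk.
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks
```

For example, `chunk_words(open("transcript.txt", encoding="utf-8").read())` would give a list of passages that could each be embedded separately. Smaller chunks make retrieval more precise, while larger ones keep more surrounding context.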

Step4: Query the index

Once the YouTube audio is transcribed and indexed, querying the index is like using a search engine for the text. The index holds the transcript as chunks, each stored alongside a vector that captures its meaning, so relevant passages can be found even when the question uses different words than the speaker did.

Asking a question (querying the index) means finding the passages of the transcript most relevant to the question and letting the language model compose an answer from them. The index provides the query engine for this:
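A query engine over a vector index typically works in two stages: retrieve the passages most relevant to the question, then hand them to the language model as context. The toy sketch below imitates both stages with the standard library. The names `retrieve` and `build_prompt` are hypothetical, and the word-overlap score is a crude stand-in for the embedding similarity a real engine would use.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of distinct words."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def retrieve(question, chunks, top_k=2):
    """Return up to top_k chunks ranked by Jaccard word overlap with the question."""
    q = tokenize(question)
    scored = []
    for chunk in chunks:
        c = tokenize(chunk)
        union = q | c
        score = len(q & c) / len(union) if union else 0.0
        scored.append((score, chunk))
    # Highest score first; chunks with no overlap at all are dropped
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for score, chunk in scored[:top_k] if score > 0]


def build_prompt(question, context_chunks):
    """Assemble the text that would be sent to the language model."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

With a list of transcript chunks in hand, `build_prompt(q, retrieve(q, chunks))` yields the kind of context-plus-question prompt that the model would then answer.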

    # index is the VectorStoreIndex built in Step3
    query_engine = index.as_query_engine()
    response = query_engine.query("write your question here")
    print(response)

By default, LlamaIndex uses an OpenAI GPT model to generate the answers, so your questions and answers can be in any of a number of different languages.

This code can be complemented with a chatbot or some GUI to cater to end users.
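As a starting point for such a front end, a plain command-line loop is often enough. The sketch below is one possible shape, not a finished chatbot: `chat_loop` is a hypothetical helper that accepts any function mapping a question to an answer, so it can be tried without an index or an API key.

```python
def chat_loop(answer_fn, read=input, write=print, prompt="Question (blank to quit): "):
    """Read questions one at a time, pass each to answer_fn, and show the reply."""
    while True:
        try:
            question = read(prompt).strip()
        except EOFError:
            break  # input stream closed, e.g. Ctrl-D
        if not question:
            break  # a blank line ends the session
        write(str(answer_fn(question)))
```

With the query engine from Step4, the call would be `chat_loop(query_engine.query)`. Passing the reader and writer as parameters keeps the loop easy to test and easy to swap for a web or GUI front end later.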